Try running `virt-manager -c qemu:///system` and `virt-manager --debug -c qemu:///system`.

`virt-manager -c qemu:///system` printed nothing at all.

`virt-manager --debug -c qemu:///system` printed the following:

[四, 29 6月 2023 09:05:00 virt-manager 254860] DEBUG (cli:204) Version 4.1.0 launched with command line: /usr/bin/virt-manager --debug -c qemu:///system

[四, 29 6月 2023 09:05:00 virt-manager 254860] DEBUG (virtmanager:167) virt-manager version: 4.1.0

[四, 29 6月 2023 09:05:00 virt-manager 254860] DEBUG (virtmanager:168) virtManager import: /usr/share/virt-manager/virtManager

[四, 29 6月 2023 09:05:00 virt-manager 254860] DEBUG (virtmanager:205) PyGObject version: 3.42.2

[四, 29 6月 2023 09:05:00 virt-manager 254860] DEBUG (virtmanager:209) GTK version: 3.24.37

[四, 29 6月 2023 09:05:00 virt-manager 254860] DEBUG (systray:84) Imported AppIndicator3=<IntrospectionModule 'AyatanaAppIndicator3' from '/usr/lib/x86_64-linux-gnu/girepository-1.0/AyatanaAppIndicator3-0.1.typelib'>

[四, 29 6月 2023 09:05:00 virt-manager 254860] DEBUG (systray:86) AppIndicator3 is available, but didn't find any dbus watcher.

[四, 29 6月 2023 09:05:00 virt-manager 254860] DEBUG (engine:244) Connected to remote app instance.

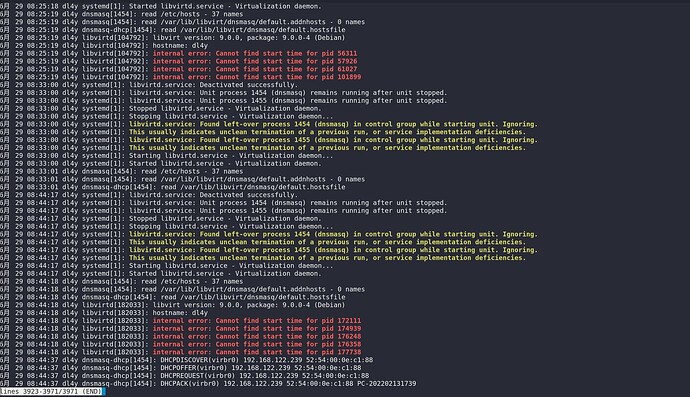

Then take a look at the libvirtd logs: run `sudo journalctl -u libvirtd`, press G to jump to the end, and check the most recent entries.
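As an alternative to paging to the bottom, the same journal can be limited to a recent time window and printed without the pager, which makes it easier to paste here. This is only a sketch: the function name `libvirtd_recent_log` and the 10-minute default are assumptions, not something from this thread.

```shell
# Show libvirtd's journal for a recent window without opening the pager.
# $1: how far back to look, in any form journalctl's --since accepts
#     (defaults to "10 minutes ago"; the default is an arbitrary choice).
libvirtd_recent_log() {
    sudo journalctl -u libvirtd --since "${1:-10 minutes ago}" --no-pager
}

# Usage:
#   libvirtd_recent_log               # last 10 minutes
#   libvirtd_recent_log "1 hour ago"  # last hour
```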

sudo systemctl restart libvirtd

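Here is what I have noticed: after restarting this service everything works, and as long as a virtual machine is running the connection stays up, for at least 5 minutes.

But with no virtual machine running, it seems to stop connecting after two or three minutes.

Could my system, or libvirt itself, be applying some power-saving measure that shuts it down automatically?

Or is it like ssh, where the connection is dropped after the front end has been idle for a few minutes?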

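Probably not. bookworm and testing currently ship the same versions of libvirt and virt-manager, and I have no problem here.

Did you upgrade your system across releases?

I have a feeling it only started after a system upgrade.

Could the upgrade have reset some configuration files?

I saw this suggested on the ArchWiki, but I have been using this system for over a year and have forgotten how I configured it back then.

Or was I dropped from some user group?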
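The group question can be checked directly. The sketch below assumes the relevant group is named `libvirt`, which is the usual name on Debian but is not confirmed anywhere in this thread; `has_group` is a hypothetical helper written for illustration.

```shell
# Return success if the group named in $1 appears among the remaining arguments.
has_group() {
    wanted=$1
    shift
    for g in "$@"; do
        if [ "$g" = "$wanted" ]; then
            return 0
        fi
    done
    return 1
}

# Usage on a live system (the group name "libvirt" is an assumption):
#   if has_group libvirt $(id -nG); then
#       echo "current user is in the libvirt group"
#   else
#       echo "current user is NOT in the libvirt group"
#   fi
```

Note that a lost group membership would normally break the connection right away, not only after a couple of idle minutes, so this check mainly helps rule that theory out.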

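Either way, thank you for spending so long debugging this with me.

For now I will use `sudo systemctl restart libvirtd` as a stopgap.

If a later upgrade happens to fix it, I will come back and post an answer.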

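No, I reinstalled with bookworm.

This feels like a bug, not a configuration problem.

I think so too.

But the logs don't report any errors.

I don't want to reinstall the system either. I was actually tempted to delete all the related configuration files and start fresh, but since restarting the service works, I won't go to that much trouble.

I'd like to see the logs from before the service was restarted. The screenshots above include quite a lot of log output, but I can't tell which parts are from when it was working and which are from when it was failing.

Everything I posted above was captured while it could not connect.

My machine happens to be in the failing state right now.

What command should I run to print the logs you need? @lilydjwg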

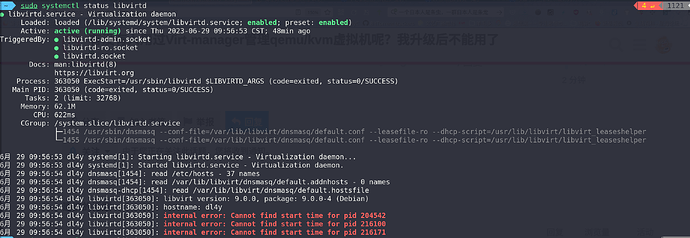

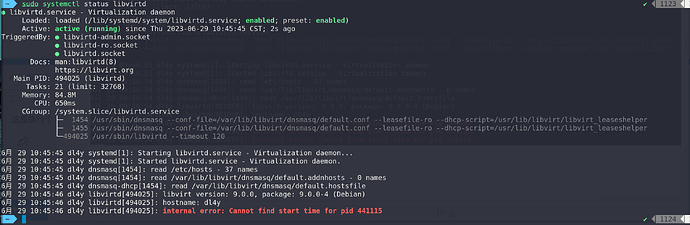

While it cannot connect, run `systemctl status libvirtd` and see what it shows.

If you can connect again after restarting the service, run it once more and compare the two.
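One way to make that comparison concrete is to save each status output to a file and diff them. This is a rough sketch; the function name `snapshot_libvirtd_status` and the file paths are made up for illustration.

```shell
# Save the current `systemctl status libvirtd` output to the file given in $1.
# systemctl exits non-zero when the unit is not running, so that exit code
# is ignored here; the point is only to capture the text.
snapshot_libvirtd_status() {
    systemctl status libvirtd --no-pager > "$1" 2>&1 || true
}

# Usage:
#   snapshot_libvirtd_status /tmp/libvirtd-failing.txt   # while it cannot connect
#   sudo systemctl restart libvirtd
#   snapshot_libvirtd_status /tmp/libvirtd-working.txt   # once it connects again
#   diff -u /tmp/libvirtd-failing.txt /tmp/libvirtd-working.txt
```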

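That's a bit strange. Let me unpack the deb package and have a look...

Does it stop connecting if nothing has connected within two minutes?

I haven't timed it exactly, but that is about right. It has just stopped connecting again.

What does `systemctl cat libvirtd` show?

I ran `sudo systemctl cat libvirtd`.

This is the output while it cannot connect.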

# /lib/systemd/system/libvirtd.service

[Unit]

Description=Virtualization daemon

Requires=virtlogd.socket

Requires=virtlockd.socket

# Use Wants instead of Requires so that users

# can disable these three .socket units to revert

# to a traditional non-activation deployment setup

Wants=libvirtd.socket

Wants=libvirtd-ro.socket

Wants=libvirtd-admin.socket

Wants=systemd-machined.service

After=network.target

After=firewalld.service

After=iptables.service

After=ip6tables.service

After=dbus.service

After=iscsid.service

After=apparmor.service

After=local-fs.target

After=remote-fs.target

After=systemd-logind.service

After=systemd-machined.service

After=xencommons.service

Conflicts=xendomains.service

Documentation=man:libvirtd(8)

Documentation=https://libvirt.org

[Service]

Type=notify

Environment=LIBVIRTD_ARGS="--timeout 120"

EnvironmentFile=-/etc/default/libvirtd

ExecStart=/usr/sbin/libvirtd $LIBVIRTD_ARGS

ExecReload=/bin/kill -HUP $MAINPID

KillMode=process

Restart=on-failure

# At least 1 FD per guest, often 2 (eg qemu monitor + qemu agent).

# eg if we want to support 4096 guests, we'll typically need 8192 FDs

# If changing this, also consider virtlogd.service & virtlockd.service

# limits which are also related to number of guests

LimitNOFILE=8192

# The cgroups pids controller can limit the number of tasks started by

# the daemon, which can limit the number of domains for some hypervisors.

# A conservative default of 8 tasks per guest results in a TasksMax of

# 32k to support 4096 guests.

TasksMax=32768

# With cgroups v2 there is no devices controller anymore, we have to use

# eBPF to control access to devices. In order to do that we create a eBPF

# hash MAP which locks memory. The default map size for 64 devices together

# with program takes 12k per guest. After rounding up we will get 64M to

# support 4096 guests.

LimitMEMLOCK=64M

[Install]

WantedBy=multi-user.target

Also=virtlockd.socket

Also=virtlogd.socket

Also=libvirtd.socket

Also=libvirtd-ro.socket

Use a code block, not a quote...
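One thing that stands out in the unit file above is `Environment=LIBVIRTD_ARGS="--timeout 120"`. According to libvirtd(8), `--timeout` makes the daemon exit after that many seconds when there are no client connections and no running domains, which would line up with the roughly two minutes observed here. With the `libvirtd*.socket` units active, a new connection is supposed to start the daemon again; whether those sockets are active on this machine is not shown in the thread. The sketch below only pulls the timeout value out of the unit text and lists the socket units to check. `extract_libvirtd_timeout` is a hypothetical helper, and `/etc/default/libvirtd` may override the value it finds.

```shell
# Read unit-file text on stdin and print the number that follows --timeout
# in the LIBVIRTD_ARGS environment line, if there is one.
extract_libvirtd_timeout() {
    sed -n 's/^Environment=LIBVIRTD_ARGS=.*--timeout[ =]\([0-9][0-9]*\).*/\1/p'
}

# Usage on a live system:
#   systemctl cat libvirtd | extract_libvirtd_timeout
#   systemctl status libvirtd.socket libvirtd-ro.socket libvirtd-admin.socket
```

If the sockets turn out to be inactive or disabled while the timeout is still in effect, that combination would be worth reporting along with the status output.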